I confess I like cooking (although I’m starting to suspect that this is an unrequited love).

My poor skills aside, one lesson I’ve learned while cooking is that without carefully selected, quality ingredients, it’s impossible to make a tasty dish.

I believe this same principle applies to surveys: without honest participants, it is impossible to obtain reliable results.

On the Internet, ensuring participants’ honesty is particularly difficult, because our contact with them is only virtual.

To increase a survey’s reliability, it is advisable to:

- Exclude duplicate participants and those with a false identity.

- Exclude people who answer faster than the estimated completion time.

- Finally, identify and exclude people who answer without paying attention.
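The first two filters in the list above (duplicates and people who answer too fast) can be sketched in Python as follows. The `Response` record and its fields are hypothetical, and so is the `min_fraction` cutoff: treating anything under half the estimated time as too fast is our assumption, so set it to `1.0` to exclude anyone faster than the estimate. Detecting false identities requires external data and is not covered here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    panelist_id: str      # hypothetical identifier of the participant
    seconds_taken: float  # time the participant needed to finish the survey


def clean_sample(responses, estimated_seconds, min_fraction=0.5):
    """Drop duplicated participants and respondents who answer too fast.

    Only the first response per panelist_id is kept. A response is "too fast"
    when it took less than min_fraction * estimated_seconds
    (min_fraction is an assumed cutoff, not a standard value).
    """
    seen = set()
    kept = []
    for r in responses:
        if r.panelist_id in seen:
            continue  # duplicated participant: keep only the first response
        seen.add(r.panelist_id)
        if r.seconds_taken < min_fraction * estimated_seconds:
            continue  # answered faster than is plausible
        kept.append(r)
    return kept


sample = [
    Response("p1", 600),
    Response("p1", 580),  # same participant answering twice
    Response("p2", 120),  # far below the estimated 600 seconds
    Response("p3", 540),
]
print([r.panelist_id for r in clean_sample(sample, estimated_seconds=600)])  # ['p1', 'p3']
```

Checking for duplicates before timing means a repeated participant is discarded even if the repeat itself looks legitimate.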

One way to identify participants who don’t pay attention is the well-known red herring question. A red herring question, or trick question, is a control device inserted in a survey that requires a specific answer from the respondent before he or she can continue.

There are many types of trick questions, but all of them must meet these four premises:

- Detect a dishonest person: ideally, he or she should not be able to move on to the next question by answering randomly.

- Avoid false positives. A trick question… is not a cruel trick!

- It must be a “normal” question, both visually and conceptually, and it must be related to the survey’s content.

- Do not offend honest panelists: do not show doubt about their answers or make them feel ashamed.

Let’s review six types of trick questions:

1. “Obvious answer” questions

These questions are answered not with an opinion but with an objective fact. For example: “What year is it?”

2. Consistency test between answers

This consists of asking the same question in two different parts of the survey and then comparing the two answers.
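A consistency test can be sketched as comparing each respondent’s answers to the repeated pair. The question ids (`Q3`, `Q17`), the 1 to 5 answer scale and the `tolerance` parameter are all hypothetical; allowing a one-point difference on a rating scale is an assumption you may or may not want.

```python
def flag_inconsistent(answers, repeated_pairs, tolerance=0):
    """Return the respondents whose answers to a repeated question differ.

    answers: respondent id -> {question id: numeric answer}
    repeated_pairs: (question id, question id) pairs that ask the same thing
    tolerance: assumed allowed difference between the two answers
    """
    flagged = []
    for respondent, given in answers.items():
        for first_q, second_q in repeated_pairs:
            if abs(given[first_q] - given[second_q]) > tolerance:
                flagged.append(respondent)
                break  # one inconsistency is enough to flag this respondent
    return flagged


answers = {
    "r1": {"Q3": 4, "Q17": 4},
    "r2": {"Q3": 5, "Q17": 1},
    "r3": {"Q3": 2, "Q17": 3},
}
print(flag_inconsistent(answers, [("Q3", "Q17")]))               # ['r2', 'r3']
print(flag_inconsistent(answers, [("Q3", "Q17")], tolerance=1))  # ['r2']
```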

3. “Captcha type” control

The respondent must type a specific given code into a text box in order to move forward.

4. “To go to the next question, mark option X” control

The respondent must select a specific answer to be able to go to the next question.

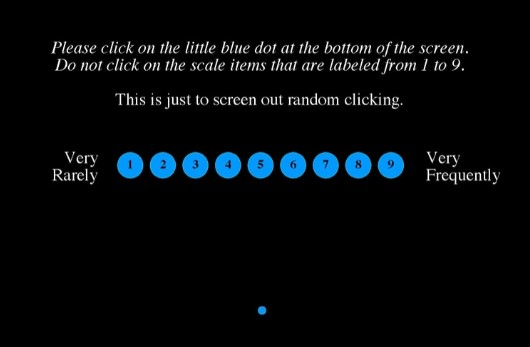

5. IMC (Instructional Manipulation Checks)

A real question is presented to the respondent, together with an instruction asking him or her to do something else, something “unusual”. For example:

6. Unlikely combinations

This question asks respondents which activities, from a long and varied list, they did in the past week. We flag as fraudulent anyone who marks many of the options, or certain predetermined combinations of them. For example, it’s extremely improbable that someone went to an NBA game, watched cricket on TV and climbed a mountain... all in the same week. This way we can identify people who tend to click every answer option to increase their chances of being selected for the survey.
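The two rules above (too many options marked, or a predetermined improbable combination) can be sketched like this. The activity codes, the combination list and the `max_share` threshold are hypothetical; a real survey would define its own list and decide how many selections count as “many”.

```python
# Hypothetical rule: selecting all of these in one week is considered implausible.
IMPROBABLE_COMBOS = [
    frozenset({"nba_game", "cricket_on_tv", "mountain_climbing"}),
]


def is_suspicious(selected, total_options, max_share=0.5, combos=IMPROBABLE_COMBOS):
    """Flag a respondent who marks too many options or an improbable combination.

    max_share is an assumed threshold: marking more than this fraction of the
    list is treated as "clicking everything".
    """
    chosen = set(selected)
    if len(chosen) > max_share * total_options:
        return True
    # A combination matches when every activity in it was selected.
    return any(combo <= chosen for combo in combos)


print(is_suspicious({"cinema", "gym"}, total_options=12))  # False
print(is_suspicious({"cinema", "nba_game", "cricket_on_tv", "mountain_climbing"},
                    total_options=12))  # True
```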

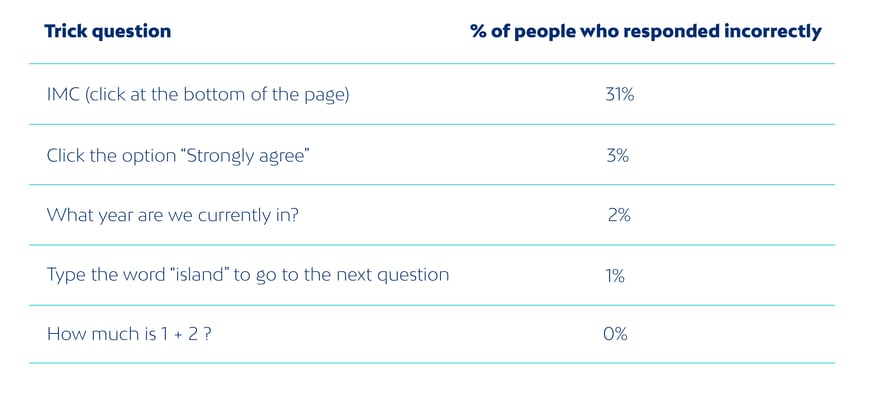

Note, however, that some trick questions are more effective than others, and that their design can greatly affect the number of panelists identified. In an internal experiment at Netquest, we obtained the following results:

In conclusion, some trick questions are undemanding: few people answer them incorrectly, so excluding those people does not change the results. Other trick questions are so demanding that they eliminate all kinds of respondents, honest and dishonest alike, at random… so excluding them does not add quality to our results either.

The strategy we recommend, particularly for online panels, is to use several trick questions in every survey we run.

This way, respondents end up paying attention to every text we present to them. Anyone can make a mistake, but a person who repeatedly answers incorrectly must be eliminated from our online community.
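The “one mistake is fine, repeated mistakes are not” policy can be sketched as a per-panelist failure counter kept across surveys. The class name and the `max_failures` threshold of three are hypothetical; how many failures count as “repeatedly” is a policy decision this sketch does not settle.

```python
from collections import Counter


class PanelQualityTracker:
    """Count failed trick questions per panelist across surveys.

    max_failures is an assumed policy threshold: a single mistake is
    tolerated, repeated ones lead to removal from the panel.
    """

    def __init__(self, max_failures=3):
        self.max_failures = max_failures
        self.failures = Counter()
        self.removed = set()

    def record(self, panelist_id, passed):
        """Register the outcome of one trick question for one panelist."""
        if passed:
            return
        self.failures[panelist_id] += 1
        if self.failures[panelist_id] >= self.max_failures:
            self.removed.add(panelist_id)

    def is_active(self, panelist_id):
        return panelist_id not in self.removed


tracker = PanelQualityTracker(max_failures=3)
tracker.record("p1", passed=False)  # one slip: still in the panel
for _ in range(3):
    tracker.record("p2", passed=False)  # repeated failures
print(tracker.is_active("p1"), tracker.is_active("p2"))  # True False
```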

Finally, we encourage you to complement this strategy by putting into practice some of the main principles of behavioral economics to increase respondents’ honesty, and to download our ebook on the essentials of online data collection.